Main aim of the study

Collect data on project characteristics that cannot be determined by visiting project websites.

Period addressed by the study

Promotion of the survey started in December 2020 and was paused in January 2021 to avoid overlap with the survey in CS Track’s Work Package 4 and to solve technical problems with the survey software. It remained possible to respond, but after the end of January 2021 no further effort was made to promote the survey. The deadline for answering the survey was 18 February 2021.

Research questions

The research questions were:

- What do organisers of citizen science know about participants in their projects? Are they confident in estimating participants’ gender, age and social situation?

- How well do the academic disciplines attributed to a project match the research expertise in the team of project organisers?

- Are there other response behaviours and response patterns of interest in a survey with a very short questionnaire?

Research context

It had already become evident that essential information on citizen science activities is not easy to find. The focus therefore lay on issues that were often missing from websites.

Research methods applied

Online survey targeting project owners and responsible coordinators of citizen science projects. The survey was limited to very few questions about the respective project and those who participate(d) in it, where this information was not available online:

- The project objectives

- The scientific disciplines involved in the project

- The type(s) of citizen science activities

- Rough estimates of the participation of different social groups, including their gender and age distributions

- Questions on practical issues, such as the availability of the respective project for further research

Most questions were aimed at project organisers’ estimates of the numbers or characteristics of participants in their projects. Citizen science project organisers were targeted without pre-selection.

Procedures applied

The survey consisted of ten questions, mainly tick-box questions, to avoid creating a barrier for smaller projects with little or no funding. All questions except question 1 were optional. The obligatory first question identified the project unambiguously.

The remaining nine questions concerned organisers’ estimates about participants. Time resources are presumably scarce in projects without employees who can fill in lengthy questionnaires. Additionally, projects that are keener on being part of citizen science networks would be more inclined to fill in a questionnaire, which would further distort the picture.

LimeSurvey was chosen as the survey tool for privacy reasons.

The survey was promoted via Twitter messages and a blog post on Österreich forscht, the online platform of Citizen Science Network Austria, at the beginning of January 2021. In December 2020, consortium partners promoted the survey on scientific mailing lists and by contacting research and higher education institutions directly by email.

Promotion messages contained a link to the CS Track website.

Summary of results/findings

Completed responses to the survey: 56.

Only three languages were used to answer the questionnaire: English (n=42), German (n=10) and Greek (n=4). English was often used even though a language version matching the official language(s) of the project’s location was available. In 50 cases the language version accessed initially was the English one; in six cases it was the German one. This does not indicate the language in which the questionnaire was finally filled in, as respondents could switch to another language version. It is interesting insofar as the questionnaire was sent out by different partners, with respective links to different language versions.

On geographic regions and sites: Where the responding citizen science projects take place is not clear at first sight. Email addresses or domain names do not always localise the projects reliably, so it is necessary to visit the project website. In most cases, but not all, the website gives a clear-cut answer. The location of the project organisation and the geographic outreach of projects were researched separately.

Summary of goals: The project organisers referred to projects showing a broad range of activities, settings, goals, involvement intensities, etc. Several projects had objectives related to biodiversity, the environment and/or a combination of both, but in different ways. This was to be expected.

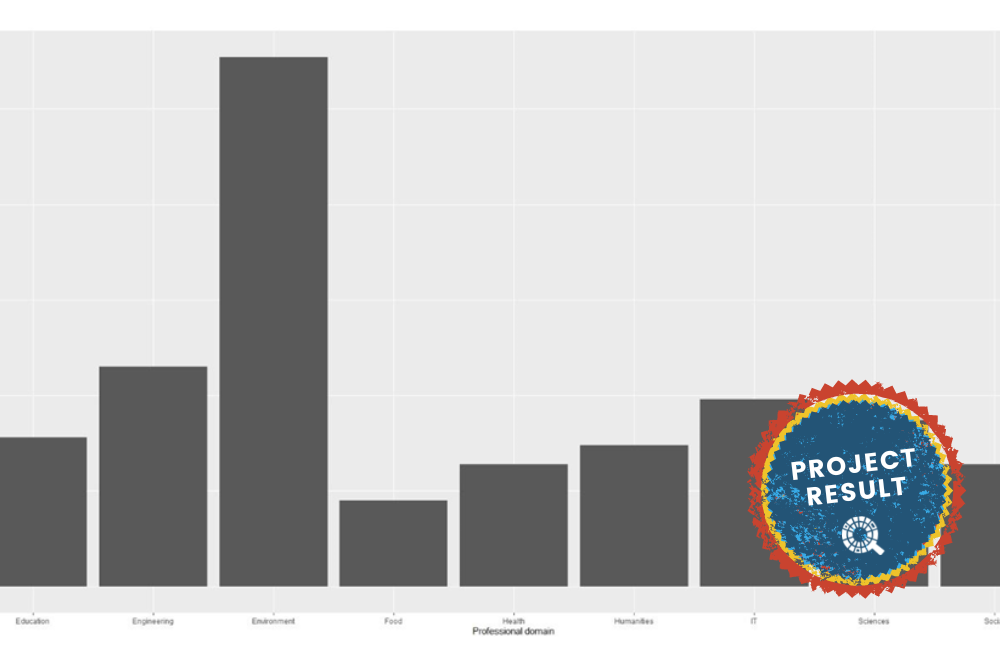

Disciplines in the diverse teams: Of the 56 analysed responses, 53 gave an answer to the question while 3 respondents skipped it. Those who answered named 1 to 5 disciplines for their project team, which resulted in a total of 162 entries. Each of these entries was manually allocated, as well as possible, to both the Web of Science (WoS) subareas and the Field of Science (FoS) classification of the Frascati Manual.

According to the 6 main categories of the Revised Field of Science and Technology classification in the Frascati Manual, natural scientists were most strongly represented. A first check did not show an obvious mismatch between the disciplines in the organisation teams and the WoS-based classification of research areas of the projects named in the CS Track project database. However, this question may be more easily answered for disciplines in the technical and natural sciences than for the social sciences and the humanities. For the latter, there may be major differences between science traditions and curricula in different parts of the world. Furthermore, some of the mentioned methods, like participatory action research, may in some contexts require knowledge of group dynamics and almost therapeutic psychological knowledge, which cannot be followed up with a questionnaire.
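The allocation described above was done manually in the study. Purely as an illustration, a tally by FoS main category could be sketched as follows. The six category names come from the Revised FoS classification; the `LOOKUP` table, the function name `tally_by_fos` and the sample entries are hypothetical and are not taken from the dataset.

```python
from collections import Counter

# The six main categories of the Revised FoS classification (OECD, 2007).
FOS_MAIN = (
    "Natural sciences",
    "Engineering and technology",
    "Medical and health sciences",
    "Agricultural sciences",
    "Social sciences",
    "Humanities",
)

# Hypothetical lookup table for illustration only; the study allocated
# each of the 162 entries by hand.
LOOKUP = {
    "biology": "Natural sciences",
    "ecology": "Natural sciences",
    "sociology": "Social sciences",
    "history": "Humanities",
}


def tally_by_fos(entries):
    """Count entries per FoS main category; return unmatched entries separately."""
    counts = Counter({category: 0 for category in FOS_MAIN})
    unallocated = []
    for entry in entries:
        category = LOOKUP.get(entry.strip().lower())
        if category is None:
            # Entries without a clear match are left for manual review.
            unallocated.append(entry)
        else:
            counts[category] += 1
    return counts, unallocated


# Invented sample entries, not survey data.
sample = ["Biology", " ecology ", "Sociology", "participatory action research"]
counts, unallocated = tally_by_fos(sample)
```

Keeping unmatched entries in a separate list mirrors the difficulty noted above: entries such as participatory action research do not map cleanly onto a single category and need a human decision.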

The number of participants was roughly estimated by respondents: All 56 respondents gave an estimate of the number of participants at the project start. 55 respondents gave an estimate of the number of participants at the time of responding to the survey or when the project ended.

Asking for two estimates gives a more reliable impression of the project size than asking for only one, as projects can change considerably over time. The answers mirror the very broad range of project sizes that one can also find in the literature.

Estimations of gender distribution: 47 respondents gave a rough estimation of what percentage of the participants were male, female or of diverse/another gender. While most of the respondents indicated a rough gender balance, there are a few projects that involve mostly men or mostly women. The percentage of participants of diverse/another gender was estimated in 7 cases.

Estimations of age distribution: There were 45 responses to this question. Only three projects indicate 100% for one age group, namely below 18 years. The youngest age group is also highly dominant in 4 additional projects (80% or more of the participants are estimated to be younger than 18 years) and moderately dominant in another project (65%). The second-youngest group (18–35 years) is also strongly represented: 4 organisers estimate it at between 65% and 95% of the participants. At the other end of the spectrum, 2 respondents estimate that 75% of their projects’ participants are older than 60 years.

Estimations of the professional status of participants: 45 respondents answered this question. It most likely could only be answered if a project targets a specific group (e.g. pupils, students) or if the project is small enough that people know each other quite well.

Response patterns: The authors had expected to see a stronger connection between the number of participants and organisers’ tendency to give rough estimations of their characteristics. As expected, almost all responding organisers of projects with fewer than 21 participants answered Questions 7–9. It is plausible that in a smaller project those involved know each other personally. But a surprisingly high number of very large projects (more than 1000 participants) also made rough estimations.

The answers can neither be regarded as representative of projects that consider themselves citizen science, nor can it be safely assumed that they cover the whole spectrum of possible citizen science activities.
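One way to inspect such a pattern is to group responses by project size and compute, per group, the share of organisers who answered the estimation questions. The sketch below assumes three size bands: the two outer thresholds (21 and 1000) come from the text, while the middle band, the function names and the example records are hypothetical. It also assumes a single yes/no flag per response for Questions 7–9.

```python
def size_band(participants):
    """Assign a project to a size band by its estimated number of participants."""
    if participants < 21:
        return "fewer than 21"
    if participants <= 1000:
        return "21 to 1000"  # assumed middle band, not defined in the report
    return "more than 1000"


def answer_rate_by_band(responses):
    """Share of responses per size band that answered Questions 7-9.

    `responses` is an iterable of (participants, answered) pairs, where
    `answered` is True if the organiser gave estimations.
    """
    totals = {}
    for participants, answered in responses:
        band = size_band(participants)
        total, yes = totals.get(band, (0, 0))
        totals[band] = (total + 1, yes + int(answered))
    return {band: yes / total for band, (total, yes) in totals.items()}


# Invented example records, not survey data.
example = [(5, True), (12, True), (300, False), (400, True), (5000, True)]
rates = answer_rate_by_band(example)
```

With only 56 completed responses, any such rates would rest on very small groups and should be read as descriptive rather than as evidence of a general relationship.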

Conclusion

Relatively few organisers of citizen science answered the questionnaire. As the questionnaire was very short and would have taken only a few minutes to answer, it is safe to assume that not all non-respondents could answer the questions. There may be several reasons for this, which would merit more research.

In line with the authors’ research so far (e.g., Strähle, Urban et al., 2021), the survey suggested that many projects do not know very much about the participants, their characteristics or even their number (or do not want to admit to it) and refrain from answering. In view of the benefits that several scholars, practitioners, policymakers and others claim citizen science brings with it, this would render some of these claims unfounded, if not implausible. Moreover, an attempt was made to investigate, in cases where academics were among the organisers, how far their expertise matched the research areas of the projects. This proved exceptionally tricky because no classification scheme exists that mirrors the broad variety of academic education in different regions.

Link to dataset

Link to the report: https://zenodo.org/record/6865659.

Link to dataset: https://zenodo.org/record/7310071

References

Eitzel, M. V., et al. (2017). Citizen Science Terminology Matters: Exploring Key Terms. Citizen Science: Theory and Practice, 2(1), 1. https://doi.org/10.5334/cstp.96.

European Commission (2018). Horizon 2020: Work Programme 2018–2020: 16. Science with and for Society.

Heigl, F., & Dörler, D. (2017). Public participation: Time for a definition of citizen science. Nature, 551, 168. https://doi.org/10.1038/d41586-017-05745-8.

Kullenberg, C., & Kasperowski, D. (2016). What Is Citizen Science? – A Scientometric Meta-Analysis. PLoS ONE, 11(1), e0147152. https://doi.org/10.1371/journal.pone.0147152.

OECD Working Party of National Experts on Science and Technology Indicators (2007). Revised Field of Science and Technology (FoS) Classification in the Frascati Manual. DSTI/EAS/STP/NESTI(2006)19/FINAL. https://www.oecd.org/science/inno/38235147.pdf.

Riesch, H., & Potter, C. (2014). Citizen science as seen by scientists: Methodological, epistemological and ethical dimensions. Public Understanding of Science, 23(1), 107–120.

Strähle, M., Urban, C. et al. (2021). Framework Conceptual Model D1.1. Zenodo. https://doi.org/10.5281/zenodo.5589618.

Strähle, M., Urban, C. et al. (2022). Conceptual Framework for Analytics Tools D1.2. Zenodo. https://doi.org/10.5281/zenodo.6045639.

Strähle, M., & Urban, C. (2022, forthcoming). The Activities & Dimensions Grid of Citizen Science. In: Proceedings of Science. PoS(CitSci2022)087. https://pos.sissa.it/418/087/.

Photo by RODNAE Productions on Pexels.